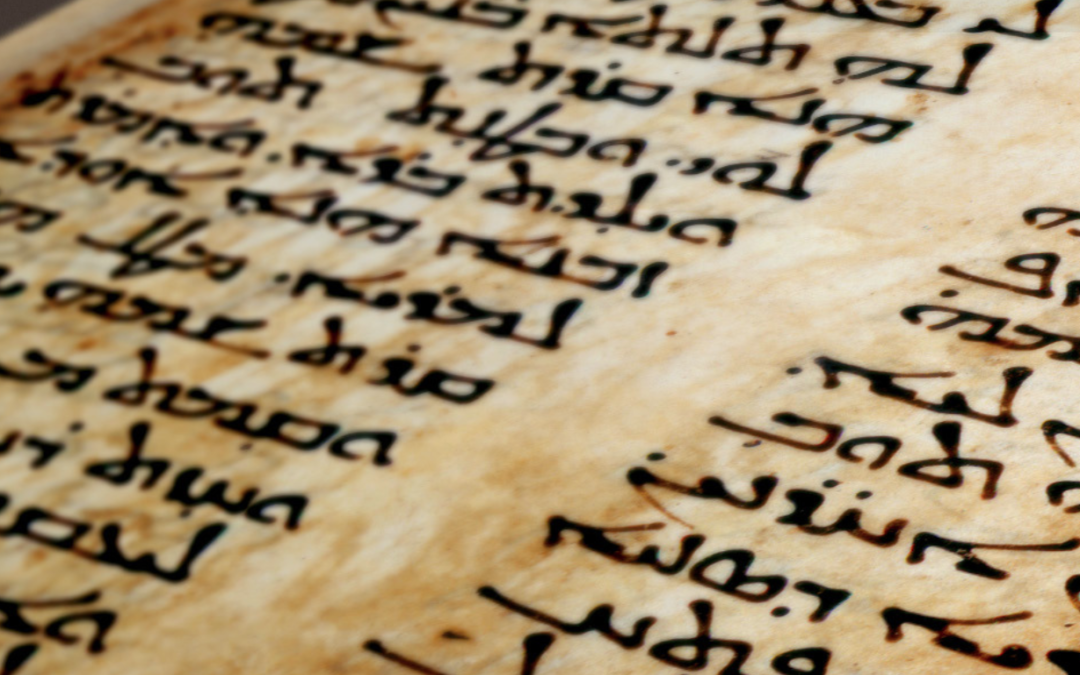

This first blog post reports on the progress of the PaTraCoSy (PAtterns in TRAnslation: Using COlibriCore for the Hebrew Bible corpus and its SYriac translation) project, which is funded by CLARIAH. The overall goal of the project is to use the ColibriCore environment to detect translation patterns between the Hebrew Bible and the Peshitta, its Syriac translation. It follows up on the CLARIAH research pilot Linking Syriac Data. The richly annotated linguistic database of the Hebrew Bible was created over a period of almost four decades (1977–2017). Thanks to a CLARIN-NL project (2013–2014), it has been made available through the SHEBANQ website, and it is also on GitHub as the BHSA. The ETCBC also produces and maintains an electronic representation of the Peshitta. This corpus was modified in the CLARIAH research pilot Linking Syriac Data (2017–2018; see the final report and GitHub repository). Some encoded texts (Kings, Psalms 1–30 and others) are linguistically annotated in a way similar to the BHSA.

ColibriCore is a CLARIAH tool developed by Van Gompel et al. as part of Van Gompel’s PhD research. In his dissertation, ColibriCore is used for automatic translation and word sense disambiguation, based on context-sensitive suggestions for translations from one language into another. This is relevant to the Bible, because Bible translation and machine translation are allies that need and reinforce each other. The Bible provides machine translation with a huge parallel corpus in a few thousand languages. In turn, machine translation is the most advanced means to support and speed up Bible translation projects (cf. e.g. Arvi Hurskainen, “Can machine translation assist in Bible translation?”).

In this CLARIAH Fellowship, however, we want to experiment from the opposite starting point. We do not use ColibriCore as a tool to create translations; instead, we start from existing ones. Using the n-gram, skipgram and flexgram search capabilities of ColibriCore, we can track significant word groups and their translations in parallel. We are mainly interested in two questions:

1. Do highly divergent translation patterns reflect specific syntactic differences? This question can be answered by comparing the ColibriCore output to the annotations in the ETCBC database.
2. Which higher-order n-grams receive a significantly different translation than their constituent parts? Answers to this question will describe patterns found in the translation of fixed expressions and other non-compositional structures.

The ancient Syriac Bible translation is an interesting case, because Hebrew and Syriac are cognate languages, yet each has its own structure. Can we find corresponding patterns between the Hebrew text and its Syriac translation, both at the lexeme level and at the level of phrase parsing? To answer these questions, we first have to prepare our data and perform word and phrase alignments so that we can use ColibriCore.
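The following minimal pure-Python sketch shows the difference between the n-grams and skipgrams mentioned above. It does not call ColibriCore itself, and the function names are hypothetical. The `{*}` gap marker is only meant to resemble ColibriCore's notation for a skipped token. Flexgrams, which allow gaps of variable length, are left out.

```python
from itertools import combinations

GAP = "{*}"  # gap marker, loosely modelled on ColibriCore's skip notation (assumption)


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def skipgrams(tokens, n):
    """Return n-token windows with one or more interior tokens replaced by GAP.

    The first and last token of each window are always kept, so every
    skipgram is anchored by real words on both sides.
    """
    result = []
    for window in ngrams(tokens, n):
        interior = range(1, n - 1)
        for k in range(1, n - 1):  # number of interior tokens to skip
            for skipped in combinations(interior, k):
                result.append(tuple(GAP if i in skipped else tok
                                    for i, tok in enumerate(window)))
    return result


if __name__ == "__main__":
    words = ["A", "B", "C", "D"]   # placeholder tokens, not corpus data
    print(ngrams(words, 2))        # [('A', 'B'), ('B', 'C'), ('C', 'D')]
    print(skipgrams(words, 3))     # [('A', '{*}', 'C'), ('B', '{*}', 'D')]
```

In terms of the second question, a skipgram such as `A {*} C` can be used to group translations of a fixed frame whose middle word varies.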

The results discussed in this blog post can be found in our GitHub repository (https://github.com/ETCBC/PaTraCoSy). The directory ‘Genesis Alignment’ contains all files needed to compute word and phrase alignments between the Hebrew and Syriac texts of the book of Genesis. The alignments themselves are among the .txt files: ‘actual.ti.final’ contains the word alignment and ‘AA3.final’ the phrase alignment.

To perform word and phrase alignment following Och and Ney (2003) and Koehn (2009), respectively, we use the GIZA++ toolkit from the Moses-SMT GitHub page (https://github.com/moses-smt/giza-pp). We first ran the plain2snt.out command on the input texts of the book of Genesis to create vocabulary files for both Hebrew and Syriac. These files have the .vcb extension. We then passed them to the snt2cooc.out command, which generates a co-occurrence file with the extension .cooc. This file is in turn required by the main GIZA++ command, which constructs the alignment files.
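These three steps can be scripted. The Python sketch below only builds the command lines and runs them with `subprocess` when asked. Besides the .vcb files, plain2snt.out also writes a sentence file (.snt), which snt2cooc.out and GIZA++ read. Several details are assumptions based on common GIZA++ usage rather than a copy of our repository's workflow: the file names derived from the input names (`hebrew.vcb`, `hebrew_syriac.snt`, and so on), the output prefix, and the `-S`, `-T`, `-C`, `-CoocurrenceFile` and `-o` options. Check which names plain2snt.out actually writes before running the commands.

```python
import subprocess
from pathlib import Path


def giza_pipeline(source_txt, target_txt, out_prefix="heb_syr"):
    """Build the three GIZA++ steps as (argv, stdout_file) pairs.

    Nothing is executed here. File naming is an assumption: the .vcb/.snt
    names are derived from the input file stems.
    """
    src, tgt = Path(source_txt).stem, Path(target_txt).stem
    src_vcb, tgt_vcb = f"{src}.vcb", f"{tgt}.vcb"
    snt, cooc = f"{src}_{tgt}.snt", f"{src}_{tgt}.cooc"
    return [
        # 1. vocabulary (.vcb) and sentence (.snt) files for both languages
        (["./plain2snt.out", source_txt, target_txt], None),
        # 2. co-occurrence file; snt2cooc.out writes it to stdout
        (["./snt2cooc.out", src_vcb, tgt_vcb, snt], cooc),
        # 3. the main alignment run (Hebrew = source, Syriac = target)
        (["./GIZA++", "-S", src_vcb, "-T", tgt_vcb, "-C", snt,
          "-CoocurrenceFile", cooc, "-o", out_prefix], None),
    ]


def run(steps):
    """Execute the steps in order, redirecting stdout where a file is given."""
    for argv, stdout_file in steps:
        if stdout_file is None:
            subprocess.run(argv, check=True)
        else:
            with open(stdout_file, "w") as fh:
                subprocess.run(argv, check=True, stdout=fh)
```

Calling `run(giza_pipeline("hebrew.txt", "syriac.txt"))` in the GIZA++ build directory would then reproduce the sequence described above. Keeping command construction separate from execution makes it easy to print and inspect the commands first.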

As described above, we take Hebrew as the source language and Syriac as the target. The file ‘actual.ti.final’ describes the word alignment. Each line contains a Syriac target word, followed by a Hebrew source word and the alignment score based on the Viterbi algorithm.
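To look up the Syriac equivalents of a given Hebrew word, the file can be grouped by source word. This minimal sketch assumes exactly the three-field line layout described above: target word, source word, score. The function name and the placeholder entries in the test are hypothetical.

```python
from collections import defaultdict


def read_ti_table(lines):
    """Group word-alignment entries by Hebrew source word.

    Each line is assumed to hold three whitespace-separated fields:
    Syriac target word, Hebrew source word, score.
    Returns {hebrew_word: [(syriac_word, score), ...]}, best score first.
    """
    table = defaultdict(list)
    for line in lines:
        fields = line.split()
        if len(fields) != 3:
            continue  # skip blank or malformed lines
        syriac, hebrew, score = fields
        table[hebrew].append((syriac, float(score)))
    for candidates in table.values():
        candidates.sort(key=lambda pair: pair[1], reverse=True)
    return dict(table)
```

Passing an open file handle, e.g. `with open("actual.ti.final", encoding="utf-8") as fh: table = read_ti_table(fh)`, gives each Hebrew word its Syriac equivalents ranked by score.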

The file ‘AA3.final’ contains the sentence alignments, each of which consists of three lines. The first line contains the alignment score, again based on the Viterbi algorithm. The second line contains the Syriac target sentence, and the third the Hebrew source sentence. This third line always starts with NULL({}). The curly brackets after NULL hold the positions of the target words that did not receive an alignment to any source word. If these brackets are empty, every target word is aligned to a source word or word group. A simple example is Genesis 1,1, where every source word corresponds one-to-one to a target word. Of course, most sentences are not that easy to align. In Genesis 1,2, for example, we find WXCK aligned to WXCWK> <L, whereas <L should be aligned to (the first part of) <L PNJ.
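Checking these alignments systematically requires parsing the three-line records. This minimal Python sketch assumes the standard GIZA++ A3 layout. The header line ends in `alignment score : <number>`. In the source line, every word, starting with NULL, is followed by the 1-based positions of the target words aligned to it, e.g. `WXCK ({ 1 2 })`. The function and pattern names are hypothetical, and the test record is a toy reduced to the Genesis 1,2 words discussed above, with a made-up score.

```python
import re

# A source word followed by the 1-based positions of its target words,
# e.g. "WXCK ({ 1 2 })" or "NULL({})"
LINK_RE = re.compile(r"(\S+?)\s*\(\{([\d ]*)\}\)")
# Header format assumed from standard GIZA++ A3 output
SCORE_RE = re.compile(r"alignment score : (\S+)")


def parse_a3(lines):
    """Parse GIZA++-style A3 output into one dict per sentence pair."""
    lines = [line.rstrip("\n") for line in lines]
    pairs = []
    for i in range(0, len(lines) - 2, 3):  # records are three lines long
        header, target, source = lines[i:i + 3]
        match = SCORE_RE.search(header)
        links = [(word, [int(p) for p in positions.split()])
                 for word, positions in LINK_RE.findall(source)]
        pairs.append({
            "score": float(match.group(1)) if match else None,
            "target": target.split(),
            "links": links,  # links[0] is NULL: target words without a source
        })
    return pairs
```

For the Genesis 1,2 fragment, the parsed links contain `("WXCK", [1, 2])`, i.e. WXCK is linked to both WXCWK> and <L.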

In this case, the preposition is not aligned correctly, but the words carrying semantic information still are. Most prepositional differences between Hebrew and Syriac, however, are aligned correctly. In sentence pair 320, for example, the Hebrew MMYRJM (a single unit consisting of a preposition and a substantive) is correctly aligned to the Syriac MN MYRJN=. The Syriac likewise consists of a preposition and a substantive, but this time they are written as two separate words.


This method can also be used to trace interesting differences between the Syriac translation and the Hebrew original, and we will use it in our further research with ColibriCore. An example is sentence pair 369, where the Hebrew JHWH is linked to two Syriac words, >BRM MRJ>. Only the second link is correct: the Syriac word >BRM has no source word in the Hebrew. This is a case where the Peshitta mentions Abraham but the Hebrew does not.
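Cases like pair 369 can be collected automatically. This sketch uses a hypothetical function name. It takes the target tokens and the links of one sentence pair in the form of a parsed A3 record: for each source word, a list of 1-based target positions, with NULL first. It reports source words linked to several target words, as well as target words left without a source word. Not every hit is a translation difference. As pair 320 shows, one-to-many links are often regular, so the output is a list of candidates for manual inspection. The test uses toy records reduced to the two words of pair 369.

```python
def divergence_candidates(target, links):
    """Flag alignment patterns worth a closer look.

    target: list of target (Syriac) tokens.
    links:  [(source_word, [1-based target positions]), ...], with the
            NULL entry first, as in GIZA++ A3 output.
    Returns (one_to_many, unaligned):
      one_to_many: source words linked to more than one target word,
      unaligned:   target words aligned to NULL (no source word).
    """
    if not links or links[0][0] != "NULL":
        raise ValueError("expected the NULL entry first")
    unaligned = [target[p - 1] for p in links[0][1]]
    one_to_many = [(src, [target[p - 1] for p in positions])
                   for src, positions in links[1:] if len(positions) > 1]
    return one_to_many, unaligned
```

For the alignment as produced, with JHWH linked to both words, the function reports a one-to-many link. A correct alignment would instead report >BRM as unaligned, which directly marks the plus in the Peshitta.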

So far, we have not paid attention to the wide range of base texts we can use. The choice to include or exclude vowel signs, diacritics and the like produces differences in the textual input. We leave a more detailed discussion of these differences to the next blog post, where we will discuss how they affect the n-gram patterns discovered by ColibriCore.