As August 2018 approaches, I enter my eighth year of full-time biblical/theological research. Even before that, a trove of the scholarly world had been available to me as I flipped open James Strong’s 1894 Exhaustive Concordance of the Bible. In this formidable compendium of every word in the Bible, Strong made it possible for casual readers to deepen their understanding of thousands of words in the Bible. Today, the many shortcomings of Strong’s concordance are well known (mainly its outdated definitions and problematic lexemes). Even so, the enduring use of Strong’s concordance today (especially online) testifies to the value of open data. Until recently, it was some of the best openly available data for Old Testament research. When I discovered that the ETCBC had begun releasing Hebrew data in 2014 with SHEBANQ and LAF-Fabric, I jumped at the opportunity to augment my research. As a result, I have been experimenting with the data for a few years now and so I hope to offer an explanation (and exhortation) for the value of open data under three broad headings that build towards what I have been building; parabible.

1. Open Data can be Transformed

When data is locked away in proprietary files formats and can only be accessed through proprietary software, the data’s usefulness is constrained. A morphologically tagged corpus in a typical software program might allow users to look up values and even search them in some way but users hit an impasse the moment they want to do something a software developer hasn’t thought of or implemented.

Transformation of data can sound esoteric or unnecessary. I would argue the contrary for two reasons. First, the difficulty users ordinarily face when trying or hoping to transform data (data they have purchased the rights to use) sets up boundaries of what is possible in our imaginations. Transformable data encourages creative thinking with regards to how to analyse and even visualise it. Second, transformation of data is important because it enables both the enrichment and exploration of data beyond initial expectations and ideas.

Recently I came across formal association measures that can be used to rank the significance of collocations (specifically the “MI” or, “Mutual Information” measure). Because the ETCBC’s Hebrew data is generously licenced, I was able to quickly put together an analysis using the MI measure by transforming the data into sets that I needed to run the MI calculation. If the data had been closed, I would not have been able to do this research. You can see my experiment with MI here, which also showcases another great feature – this research is easy to document, study and repeat.

2. Open Data can be Enriched

The Hebrew data that the ETCBC has encoded millions of features of the Hebrew Bible. In itself, this is a trove of information about morphology and syntax to explore. There are a number of features that are absent, though. Some are simply augmentations of existing data but some are completely new.

One of my professors wanted to search for all the occurrences of כִּי with a Gershayim accent. This can of course be done by examining vocalised, accented words as they appears in the text. The openness of the ETCBC data meant that I was able to augment it though, and add features for “accent name” (like “Gershayim”) and “accent type” (whether “conjunctive” or “disjunctive”). It would be trivial to add accent rankings as an additional feature (the code to add this data is on github). Now, searching for כִּי + Gershayim is trivial and we can search for any accent combination. We can also start looking for anomalies in the Hebrew Bible. For example, we could search for disjunctive accents on nouns in construct. This is made possible because the ETCBC data is open and supports enrichment.

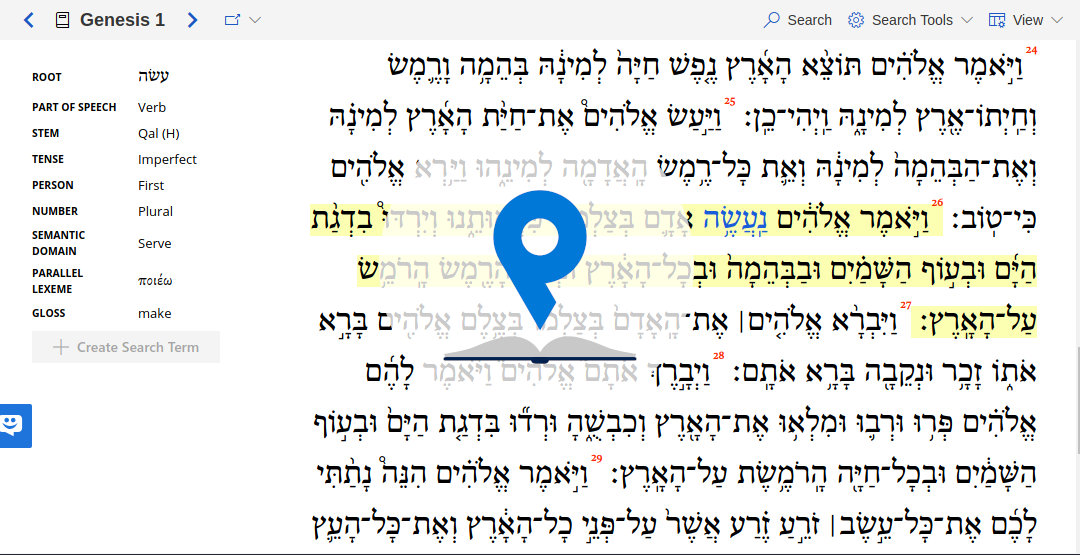

Some enrichment is more than simply an augmentation of data that is already in the data set. Another way that I have enriched the ETCBC data is by adding a “Semantic Domain” and “LXX Lexeme” as features on every word. I did this by gathering generously licensed data from elsewhere and lining it up with the ETCBC format (the code for these enrichments is also available on github). Admittedly, much work needs to be done on these additional features but as a first step it is promising. With this first step, when I come across a hapax legomenon in Hebrew, I am now able to search for other instances of the associated LXX Lexeme. With semantic domains at our fingertips, we can now look up verbs that have deities as their subject. Again, these enrichments are only possible because the ETCBC opened their data.

3. Open Data can be Explored

Already, I have been exploring data in this post. The MI association measure and searching for כִּי with a Gershayim are both examples of data exploration. But what has made me most excited about open data with the ETCBC is that I can explore it in whatever ways I can imagine. I experimented for a while with Text-Fabric, the ETCBC’s data format and querying standard. It didn’t take long for me to realise, however, that the complex types of queries that Text-Fabric was designed to support were well beyond what I was trying to accomplish. Text-Fabric also needs a fair bit of RAM and I found that I was bringing my laptop to a screeching halt when I tried to load up lots of features.

I wanted to be able to read the Hebrew Bible, notice an interesting feature and then find similar structures. What I was really looking for then, was a way to quickly build queries based on words that I was looking at. The web provided a perfect platform for reading and selecting relevant words. Clicking on words could allow me to look up features. Finally, clicking on features could allow me to build up queries and find similar sentences, clauses or phrases. With this in mind, I created parabible.

Parabible uses the ETCBC’s Hebrew morpho-syntactical data to allow users can navigate through and read the Bible in its original languages and then quickly build queries for individual words or simple syntax. Over time I’ve had the chance to line up other translations (an LXX text and the NET) and I’ve added the SBL GNT with its critical apparatus. Hopefully the next steps involve doing more with the syntax structures but that’s for another post. You can get an idea of what is already possible by watching the gif below.

Parabible search in action. Try out the same search yourself: https://parabible.com/Ezekiel/36#25

James, thanks for expressing these values in a way that any computing humanist will recognise. Indeed, Text-Fabric is a monster engine, but with it you can produce smaller, friendly engines for dedicated tasks. Your Parabible is an elegant example (although I wonder whether you used TF to create it). And, as a very basic example, Camil Staps’s Hebrew reader comes to mind: https://reader.hebrewtools.org .